Incremental Text to Speech for Neural Sequence-to-Sequence Models using Reinforcement Learning

Efforts towards incremental text to speech (TTS) systems have largely focused on traditional, non-neural architectures [1, 2, 3]. As samples from neural TTS systems become increasingly realistic, we examine what makes the architectures of these systems offline, and then address these problems using a reinforcement learning based framework.

Before we get into the nuts and bolts of it though, perhaps the best place to start is to describe what exactly is meant by an incremental TTS system. Typically, modern TTS architectures process the entire input text before synthesising any audio. At the outset, this might seem odd. After all, the way the first word needs to be pronounced hardly depends on the last, does it? However, consider the sentence “She read the Guide years ago!”. Suppose you had only observed the sub-sentence “She read”. Think about how you might choose to pronounce this. You may come to the conclusion that the last 4 characters could be pronounced in one of two ways: either to rhyme with the colour ‘red’, signifying the past tense, or to rhyme with ‘need’, as in “She reads every day”. The only way to know the correct pronunciation is to observe whether the next character in the sentence is a blank or an ‘s’. This suggests that any algorithm designed to synthesise speech incrementally, while reading the text, needs a certain level of intelligence, and that a simple rule-based system might falter.

Now that we know what incremental TTS is, it is natural to wonder why such a system might be desirable. There has been a considerable amount of work on developing streaming, end-to-end architectures for tasks like speech recognition [4, 5] and machine translation [6, 7]. Imagine placing an incremental TTS system at the tail end of a pipeline with these two components. This would enable us to translate speech from one language to another simultaneously! Seems like the stuff of science fiction, doesn’t it?

With this understanding, we are well placed to outline our contributions. In our paper, we propose an approach by which sequence-to-sequence TTS models can be adapted to work in an online manner. Rather than adopt a rule-based approach [8], and motivated by similar work in machine translation [7], we propose a reinforcement learning based approach which can adapt its policy based on the sentence at hand. But before we get ahead of ourselves, let’s lay some groundwork.

Broad setup

We use a modified version of the Tacotron 2 model [9] to demonstrate our approach, tasking it with the conversion of input text into a mel spectrogram. To synthesise the final audio, we simply adapt the inference behaviour of a neural vocoder to work in a purely auto-regressive manner, and so focus on the first part of the pipeline for the remainder of this post.

Out of the box, the Tacotron 2 model has a number of components that render it unsuitable for the incremental setting. Let us begin with the encoder. The broadening receptive field of the convolutional layers and bidirectionality of the encoder are clearly two problem areas. We take the simplistic approach of removing the convolutional layers and replacing the bi-directional encoder with a uni-directional one. Further, we discard the post-net module. One may point out (and rightly so) that these changes could negatively impact the quality of the synthesis. However, these seem necessary for the incremental setting. We illustrate the impact of these modifications on our model with audio samples.

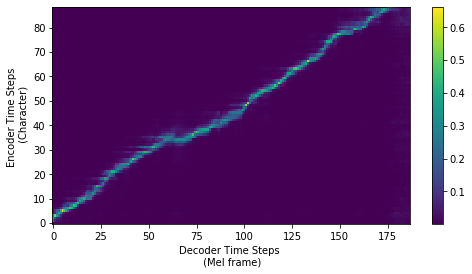

With the offline-ness of these components addressed, it might be tempting to think our work is close to complete. After all, the decoder works in an auto-regressive manner. Unfortunately, the attention mechanism throws an unwieldy spanner in the works. Firstly, the computation of the alignments involves a softmax, which suggests the entire sequence needs to have been processed. Secondly, suppose the decoder is at some intermediate step. The purpose of the attention mechanism is precisely to allow it to have access to any of the encoder outputs! This seems to go against the fundamentals of incremental synthesis! However, closer inspection of the actual alignment values suggests an interesting alternative.

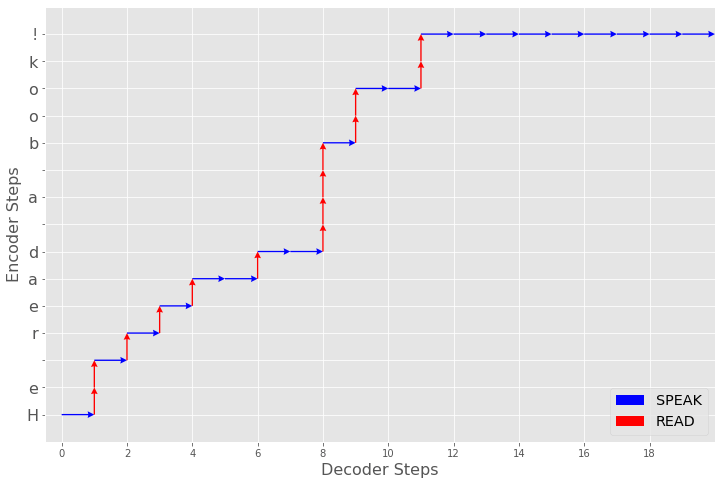

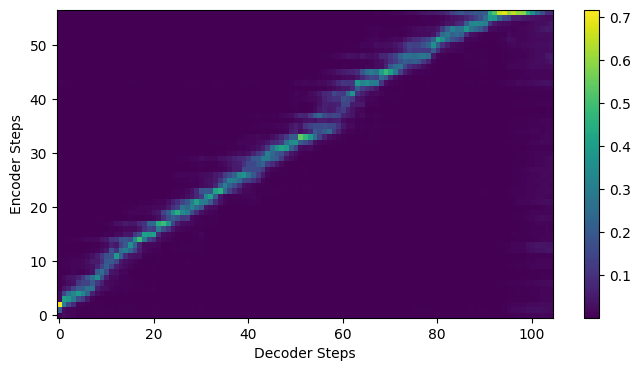

In the figure above, observe that at a particular decoder step, only a small fraction of the alignment weights contribute significantly. Further, these alignments follow a ‘roughly monotonic’ trajectory. This suggests that the model doesn’t actually use the ‘future’ contexts while decoding each time step. Motivated by this observation, we propose to maintain an increasing buffer of input characters at each decoder step. That way, at any given decoder step, the decoder has access only to the characters read so far. Once this subsequence has been completely synthesised, we can incorporate an additional character into our buffer (READ) and then attempt to synthesise that (SPEAK)! The figure below shows one such possible trajectory.
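To make the idea of attending over a growing buffer concrete, here is a minimal sketch in plain Python. It assumes the unnormalised attention scores are already available as a list, and `prefix_attention` and `num_read` are hypothetical names; the attention used in Tacotron 2 is more involved than this. The point is only that the softmax can be normalised over the characters read so far, so nothing beyond the buffer is required.

```python
import math

def prefix_attention(scores, encoder_outputs, num_read):
    """Softmax attention restricted to the first `num_read` encoder outputs.

    scores: one unnormalised attention score per input character.
    encoder_outputs: one vector (list of floats) per input character.
    num_read: size of the current input buffer (hypothetical name).
    """
    if not 1 <= num_read <= len(scores):
        raise ValueError("num_read must be between 1 and len(scores)")
    visible = scores[:num_read]
    peak = max(visible)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in visible]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(encoder_outputs[0])
    # Context vector: weighted sum of the visible encoder outputs only.
    context = [
        sum(w * encoder_outputs[i][d] for i, w in enumerate(weights))
        for d in range(dim)
    ]
    return weights, context

scores = [2.0, 1.0, 0.5, 3.0]
encoder_outputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [5.0, 5.0]]
weights, context = prefix_attention(scores, encoder_outputs, num_read=2)
```

Here the fourth character has the largest score, but because only two characters have been read it plays no part in the weights or the context.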

The question then becomes: how do we decide when the synthesis of any given subsequence is complete? Or, more philosophically put, how does the model know when to stop talking and instead read? This is the decision we propose to make with the reinforcement learning framework.

Proposed agent

To avoid making this post prohibitively long, we’re going to assume some basic knowledge of reinforcement learning and refer the uninitiated to Chapter 3 of the excellent book, Reinforcement Learning: An Introduction by Richard S. Sutton and Andrew G. Barto.

Agent

From the above discussion, it seems only natural to specify the actions available to the agent as being

- READ: Provide the attention mechanism an additional character to attend over. This would constitute a step in the vertical direction in the above figure.

- SPEAK: Decode based on the characters read thus far. This would constitute a step in the horizontal direction in the above figure.
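The two actions can be pictured as steps on a grid. The sketch below is illustrative only: it represents actions as plain strings and uses a hypothetical helper, `trace_trajectory`, to record the point reached after each action, with the horizontal coordinate counting frames spoken and the vertical coordinate counting characters read.

```python
READ, SPEAK = "READ", "SPEAK"

def trace_trajectory(actions):
    """Return the (frames_spoken, chars_read) point reached after each action."""
    chars_read, frames_spoken = 0, 0
    path = [(frames_spoken, chars_read)]
    for action in actions:
        if action == READ:
            chars_read += 1       # vertical step: one more character in the buffer
        elif action == SPEAK:
            frames_spoken += 1    # horizontal step: one more decoder step
        else:
            raise ValueError(f"unknown action: {action!r}")
        path.append((frames_spoken, chars_read))
    return path

path = trace_trajectory([READ, SPEAK, SPEAK, READ, SPEAK])
```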

Environment

We use a fully trained modified Tacotron 2 model to provide the agent with the requisite information to be able to make this decision (the observations) as well as the appropriate feedback (the rewards). We now discuss these components in further detail.

Observations

Intuitively, via this observation vector, we need to provide the agent with enough information so that it can decide whether it has completed generating the spectrograms corresponding to the characters that have been read (in which case a READ action should be performed), or if it is still decoding (in which case a SPEAK action should be selected). With this in mind, we define our observation vector as the concatenation of the following:

- The attention context vector at the current decoder step, based on the characters read thus far.

- A fixed-length moving window of the latest attention weights.

- The most recent mel spectrogram frame (the frame used differs between training and evaluation).

Returning to our intuition, we see that the mel spectrogram frame should provide the agent with enough information to track what has been said, while the attention context and weights enable it to identify what has been read.
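As a rough illustration of how such an observation might be assembled, the sketch below concatenates the three pieces into one flat list. The function name, the `window` size, and the choice to take the weights on the most recently read characters and left-pad with zeros are all assumptions made for this example; the precise windowing is described in the paper rather than in this post.

```python
def build_observation(context, attention_weights, last_frame, window=4):
    """Concatenate context vector, a fixed-length slice of attention weights,
    and the most recent mel frame into one observation vector.

    Assumption: the window covers the weights on the most recently read
    characters, left-padded with zeros while fewer than `window` are read.
    """
    recent = list(attention_weights[-window:])
    padded = [0.0] * (window - len(recent)) + recent
    return list(context) + padded + list(last_frame)

obs = build_observation(
    context=[0.1, 0.2],
    attention_weights=[0.3, 0.7],
    last_frame=[1.0, 2.0, 3.0],
    window=4,
)
```

Keeping the window at a fixed length means the observation size does not change as the buffer grows, which is what allows a fixed-size network to consume it.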

Rewards

One would expect a trade-off between the latency incurred during the synthesis and the quality of the synthesised output. We wanted our reward mechanism to mimic this balancing act and so settled on it having two components: one representing the delay and one capturing the quality.

That’s all been delightfully vague, so let’s get into (some of) the specifics.

The delay component itself is defined as the sum of two terms,

- A local signal, which is designed to discourage consecutive READ actions, thereby encouraging the model to interleave the READ and SPEAK actions.

- A global penalty, which is incurred at the end of the episode. Geometrically, it represents the proportion of area under the policy trajectory. Given it is a proportion of area, it ranges between 0 and 1. However, let us analyse the extreme cases. A value of 0 corresponds to the unreasonable goal of synthesising audio without reading any text. A value of 1 corresponds to the existing setup of reading the entire input sentence and then synthesising the complete audio. We would like this value to be as low as possible, with the hope that the quality term will ensure it does not collapse to 0.
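The geometric description of the global penalty can be made concrete with a short sketch. It assumes the policy is given as a list of "READ"/"SPEAK" strings and that area is counted in grid cells, one column per decoder step. The function names are hypothetical, and since the exact form of the local signal is not given in this post, `consecutive_reads` merely counts back-to-back READs as an illustration.

```python
def area_proportion(actions, num_chars, num_frames):
    """Proportion of the (frames x characters) grid lying under the policy path."""
    chars_read = 0
    area = 0
    for action in actions:
        if action == "READ":
            chars_read += 1
        elif action == "SPEAK":
            area += chars_read  # column height under the path for this frame
        else:
            raise ValueError(f"unknown action: {action!r}")
    return area / (num_chars * num_frames)

def consecutive_reads(actions):
    """Count READ actions that immediately follow another READ."""
    return sum(1 for prev, cur in zip(actions, actions[1:]) if prev == cur == "READ")

offline = ["READ"] * 3 + ["SPEAK"] * 4
interleaved = ["READ", "SPEAK", "READ", "SPEAK", "READ", "SPEAK", "SPEAK"]
never_reads = ["SPEAK"] * 4

offline_area = area_proportion(offline, num_chars=3, num_frames=4)
interleaved_area = area_proportion(interleaved, num_chars=3, num_frames=4)
never_reads_area = area_proportion(never_reads, num_chars=3, num_frames=4)
```

For this toy sentence the offline policy gives a proportion of 1, the interleaved policy gives 0.75, and the degenerate policy that never reads gives 0, matching the two extremes described above.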

Which brings us neatly onto the quality component, which is simply the weighted mean-squared error between the synthesised frame and the ground truth mel spectrogram frame.
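A minimal sketch of such a quality term is given below. Two things here are assumptions rather than details from this post: the weighting is modelled as optional per-bin weights, and the error is negated so that a closer match yields a higher reward. The paper gives the actual definition.

```python
def weighted_mse(predicted, target, weights=None):
    """Mean over mel bins of weight * squared error (per-bin weights assumed)."""
    if len(predicted) != len(target):
        raise ValueError("frames must have the same number of mel bins")
    if weights is None:
        weights = [1.0] * len(predicted)
    total = sum(w * (p - t) ** 2 for w, p, t in zip(weights, predicted, target))
    return total / len(predicted)

def quality_reward(predicted, target, weights=None):
    """Assumed sign convention: lower error means higher reward."""
    return -weighted_mse(predicted, target, weights)

error = weighted_mse([1.0, 2.0], [1.0, 4.0])
reward = quality_reward([1.0, 2.0], [1.0, 4.0], weights=[0.5, 0.5])
```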

We have restricted ourselves to an intuitive description of the rewards here. For a more formal, technical treatment, we refer the reader to our paper.

Terminal Flag

At train time, there are two ways that the episode can terminate:

- All the characters have been read, in which case the agent SPEAKs until all the frames are synthesised. It is then given a cumulative reward for these SPEAK actions.

- All the aligned mel-spectrograms have been consumed, in which case the agent is given an additional penalty equal to the number of unread characters and the episode is terminated.

During inference, the episode runs until our Tacotron 2 model’s stop token condition is triggered.
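The two train-time termination cases can be summarised in a few lines. This is a sketch under assumptions: the function name and return layout are hypothetical, the penalty is returned as a positive count to be subtracted from the reward elsewhere, and checking the frame condition first is an arbitrary choice for the case where both conditions hold at once.

```python
def training_termination(chars_read, num_chars, frames_spoken, num_frames):
    """Return (done, unread_penalty, forced_speaks) for the current counters.

    unread_penalty: number of unread characters when the frames run out.
    forced_speaks: SPEAK actions still to be taken once all text is read.
    """
    if frames_spoken >= num_frames:
        # All aligned mel frames consumed: penalise each unread character.
        return True, num_chars - chars_read, 0
    if chars_read >= num_chars:
        # All characters read: SPEAK until the remaining frames are synthesised.
        return True, 0, num_frames - frames_spoken
    return False, 0, 0

ran_out_of_frames = training_termination(3, 5, 10, 10)
read_everything = training_termination(5, 5, 4, 10)
still_running = training_termination(2, 5, 4, 10)
```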

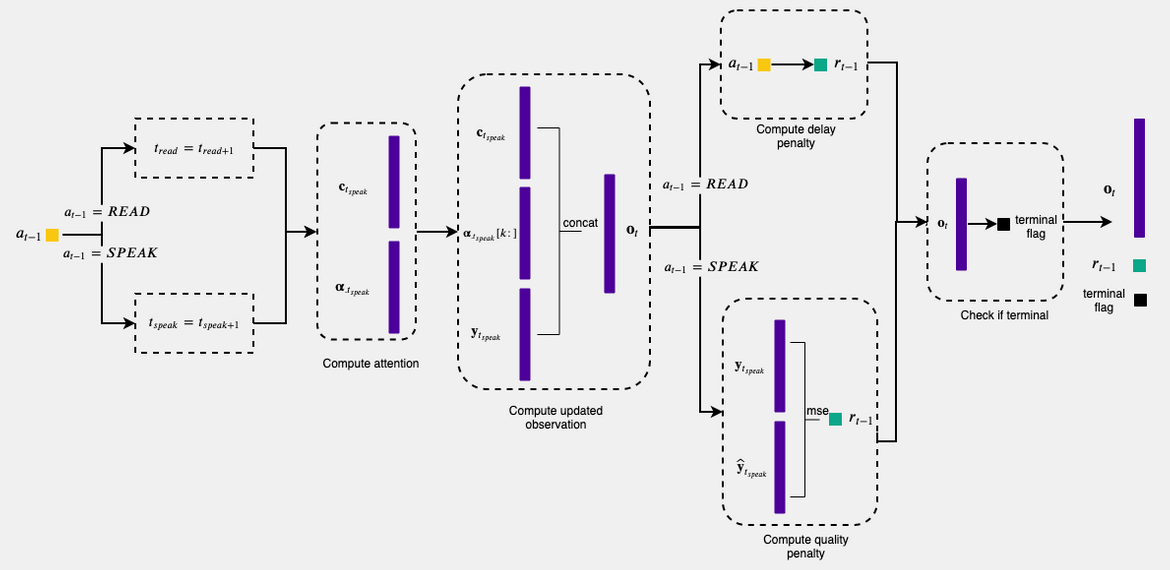

Flow, Setup and Learning

To make the description of the underlying mechanics a little less tedious, we felt a pictorial depiction might help. First up, we present the environment flow.

Depending on the chosen action, the appropriate counter is incremented. Following this, we compute the attention weights and corresponding context based on vectors in the current input buffer. A concatenation of the relevant portions then gives us the observation vector. The reward is then computed based on the chosen action and finally, we check whether the episode has terminated.

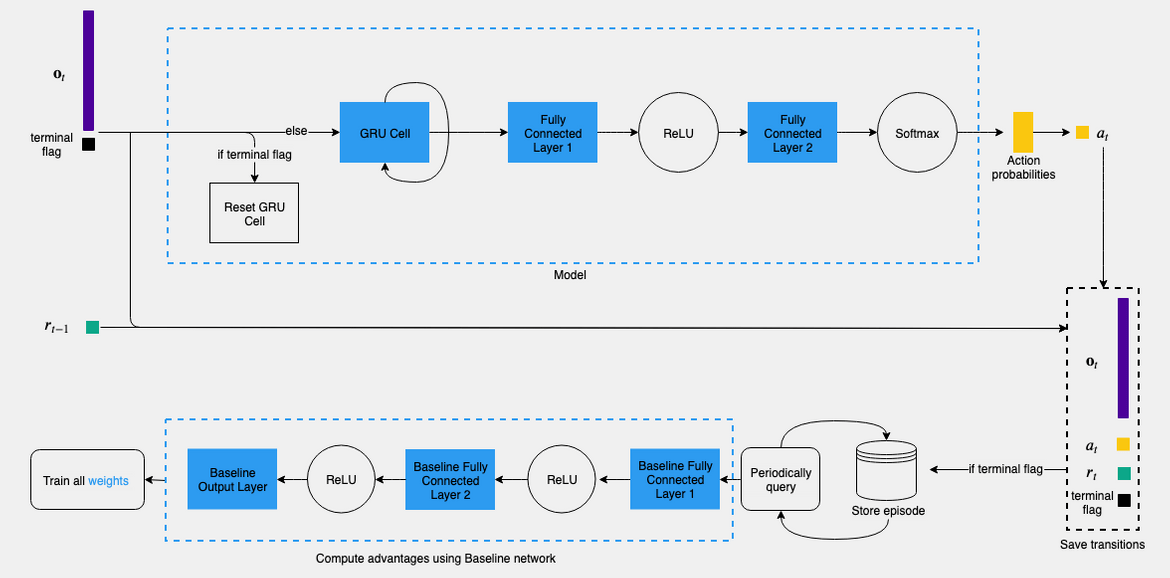

At each step, the agent receives an observation, the terminal flag and the reward. If the episode has not terminated, the observation is passed through a GRU cell, whose output is fed through two further layers. The recurrent unit is necessary because the agent receives only a single, previous mel spectrogram frame as part of its observation. Recall that the motivation behind constructing the observation vector as we did was to enable the agent to decipher whether the characters read thus far have been synthesised in the frames of audio generated. In other words, the agent must be able to tell whether or not the audio generated corresponds precisely to the characters available to it. However, even from the perspective of a human, a single frame of audio (a matter of milliseconds) is insufficient to make this decision. Instead, we would use the information present in a sequence of such frames. A recurrent unit enables the agent to learn to retain this sequential information across time steps.

A softmax layer is then used to convert the scores to probabilities, from which an action is either sampled or greedily chosen. The transition is also stored (note that the reward lags by one time step). If the episode has terminated, the model simply resets the GRU cell and saves the episode. Periodically, the saved episodes are used for training the agent. However, to reduce the variance, a baseline is subtracted from the returns before using them for training.
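The final action-selection step can be sketched as follows. The scores are assumed to be the two outputs of the agent's last layer, ordered as (READ, SPEAK); that ordering, the function name and the `rng` parameter are choices made for this example.

```python
import math
import random

ACTIONS = ("READ", "SPEAK")  # assumed ordering of the two output scores

def select_action(scores, greedy=False, rng=random):
    """Turn scores into probabilities with a softmax, then sample or take the argmax."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    if greedy:
        index = max(range(len(probs)), key=probs.__getitem__)
    else:
        index = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return index, probs

greedy_index, probs = select_action([0.0, 2.0], greedy=True)
sampled_index, _ = select_action([0.0, 2.0], rng=random.Random(0))
```

Sampling is the natural choice while collecting training episodes, since it lets the agent explore, whereas the greedy choice gives deterministic behaviour at evaluation time.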

We learn the policy parameters using the policy gradient approach and learn the baseline network’s parameters by minimising the squared distance between the sampled returns and returns predicted by the baseline.
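To show how the baseline enters the two objectives, here is a small numerical sketch of a policy gradient loss with a baseline. It is not the training code used for the model: the discount factor `gamma`, the averaging over time steps and the function names are assumptions, and in an automatic differentiation framework the advantages would be treated as constants when differentiating the policy loss.

```python
def discounted_returns(rewards, gamma=1.0):
    """Return-to-go at every step of one episode."""
    returns, running = [], 0.0
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns

def policy_gradient_losses(log_probs, rewards, baseline_values, gamma=1.0):
    """Return (policy_loss, baseline_loss) for one stored episode.

    log_probs: log-probability of each action that was taken.
    baseline_values: returns predicted by the baseline at each step.
    """
    returns = discounted_returns(rewards, gamma)
    advantages = [g - b for g, b in zip(returns, baseline_values)]
    n = len(returns)
    # Minimising this loss increases the probability of actions with positive advantage.
    policy_loss = -sum(lp * adv for lp, adv in zip(log_probs, advantages)) / n
    # The baseline is regressed onto the sampled returns.
    baseline_loss = sum((g - b) ** 2 for g, b in zip(returns, baseline_values)) / n
    return policy_loss, baseline_loss

returns = discounted_returns([1.0, 0.0, -1.0], gamma=0.5)
policy_loss, baseline_loss = policy_gradient_losses(
    log_probs=[-0.5, -1.0], rewards=[1.0, 1.0], baseline_values=[1.5, 1.0]
)
```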

Performance

For a thorough quantitative analysis of our model, we refer the reader to our paper. In this post, we instead focus on qualitatively analysing the model’s behaviour.

Comparing the policy path against the learnt alignments illustrates some interesting aspects of our model’s behaviour. Recall that under the standard policy of reading the entire input sentence before synthesising, the alignments look like the figure below.

[Audio sample: the synthesised (vocoded) audio under this policy]

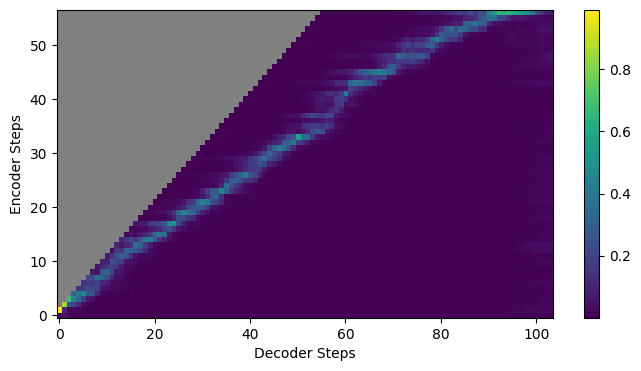

Suppose we now consider a simple, rule-based policy of alternating between READ actions and SPEAK actions, i.e., for every one character read, the model attempts to perform one decoder step. If we grey out the ‘unavailable’ (or unread) characters at each decoding step, the alignments would look like the following figure.

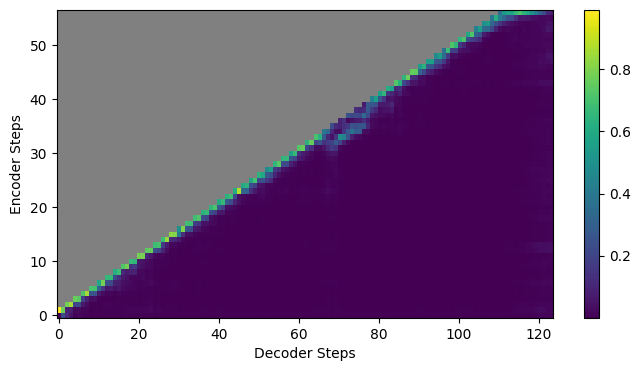

Such a policy clearly incurs a lower latency since the SPEAK actions are interleaved with the READs. However, in the middle portion of the sequence, there still appears to be a significant number of unnecessary READs occurring. Taking this simplistic approach a step further, we could perform a READ action once for every two SPEAK actions, i.e., take two decoder steps for every one character read. The resulting alignments would then look like the following figure.

This figure clearly shows that the policy path and the prominent alignment diagonal ‘intersect’ in such a scenario. This significantly impacts the quality of the synthesised audio, as demonstrated below.
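The two rule-based policies discussed above can be generated with a few lines of code. The sketch below is illustrative: `fixed_schedule` and `chars_available_per_frame` are hypothetical helpers, the policy reads one character before each burst of SPEAK actions, and once the text is exhausted it simply speaks the remaining frames.

```python
def fixed_schedule(num_chars, num_frames, speaks_per_read=1):
    """READ one character, SPEAK `speaks_per_read` frames, and repeat."""
    actions = []
    chars_read = frames_spoken = 0
    while frames_spoken < num_frames:
        if chars_read < num_chars:
            actions.append("READ")
            chars_read += 1
            burst = speaks_per_read
        else:
            burst = num_frames - frames_spoken  # text exhausted: speak the rest
        burst = min(burst, num_frames - frames_spoken)
        actions.extend(["SPEAK"] * burst)
        frames_spoken += burst
    return actions

def chars_available_per_frame(actions):
    """Number of characters in the buffer at each decoder step."""
    available, chars_read = [], 0
    for action in actions:
        if action == "READ":
            chars_read += 1
        else:
            available.append(chars_read)
    return available

one_to_one = chars_available_per_frame(fixed_schedule(3, 5, speaks_per_read=1))
one_to_two = chars_available_per_frame(fixed_schedule(3, 5, speaks_per_read=2))
```

With two SPEAK actions per READ, fewer characters are available at each decoder step, which is how the policy path can end up crossing the alignment diagonal.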

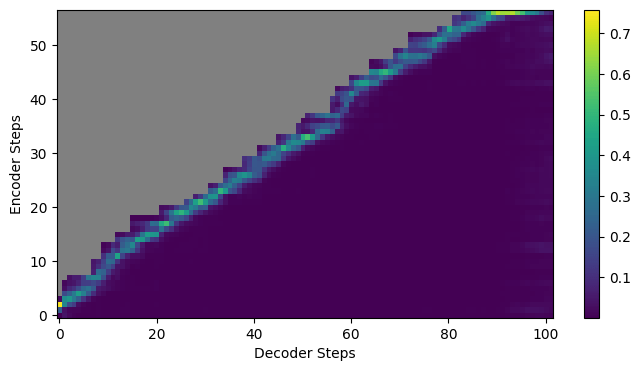

Based on this, it appears that the ‘ideal’ path lies somewhere between these two policies. One might suggest that the desired behaviour would be for the greyed out portion to ‘hug’ the alignments from above. This would reduce the proportion of area under the policy path (and thence, the latency) while still synthesising high quality audio.
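One way to picture a path that ‘hugs’ the alignments from above is to derive it directly from a known alignment. The sketch below is purely illustrative and is not how our agent works, since the agent has no access to the full alignment in advance. It assumes we are given, for each decoder step, the index of the most strongly attended character (`alignment_peaks`), plus a hypothetical `lookahead` margin of extra characters.

```python
def hugging_read_counts(alignment_peaks, num_chars, lookahead=0):
    """Smallest number of characters to have read at each decoder step so that
    the most attended character (plus `lookahead` more) is always available."""
    counts, needed = [], 0
    for peak in alignment_peaks:
        # The path can only go up: never 'unread' a character.
        needed = max(needed, min(peak + 1 + lookahead, num_chars))
        counts.append(needed)
    return counts

counts = hugging_read_counts([0, 0, 1, 1, 2, 4, 4], num_chars=5, lookahead=1)
```

A policy following these counts stays just above the alignment diagonal, reading a new character only when the decoder is about to need it.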

The corresponding plot generated by our learnt model exhibits precisely this behaviour!

The alignments, combined with the synthesised audio quality, suggest that the model has in fact learnt to SPEAK only when it has READ a sufficient number of characters, but not too many more.

We provide more examples of this on our samples page along with a similar analysis from a model trained on a French corpus. As an exciting aside, we found that we could use precisely the same set of hyperparameters and overall setup for training on each of the two languages, which further underlines the flexibility of our approach!

Babel-ing along

What our method has demonstrated is that for neural sequence-to-sequence, attention-based TTS models, there is no algorithmic barrier to incrementally synthesising speech from text.

There are a number of interesting avenues along which this research can be taken further. One could look at further improving the quality of the synthesised audio by jointly training the Tacotron weights on these sub-sentences, rather than using a Tacotron pre-trained on full sentences. The input to our system is currently characters. One could explore the possibility of using phoneme/word-level inputs. On an engineering front, if such a model were to be used to synthesise text as it was being typed, one could explore how to handle deletions/edits or even pauses.

And perhaps, once these challenges are better explored, we might be just a few steps closer to making Douglas Adams’ mystical babel fish a reality.

1. T. Baumann and D. Schlangen, “The inprotk 2012 release,” in NAACL-HLT Workshop on Future Directions and Needs in the Spoken Dialog Community: Tools and Data. Association for Computational Linguistics, 2012, pp. 29–32.

2. T. Baumann and D. Schlangen, “INPROiSS: A component for just-in-time incremental speech synthesis,” in Proceedings of the ACL 2012 System Demonstrations. Jeju Island, Korea: Association for Computational Linguistics, Jul. 2012, pp. 103–108. [Online]. Available: https://www.aclweb.org/anthology/P12-3018

3. M. Pouget, T. Hueber, G. Bailly, and T. Baumann, “HMM training strategy for incremental speech synthesis,” in Sixteenth Annual Conference of the International Speech Communication Association, 2015.

4. Y. He, T. N. Sainath, R. Prabhavalkar, I. McGraw, R. Alvarez, D. Zhao, D. Rybach, A. Kannan, Y. Wu, R. Pang et al., “Streaming end-to-end speech recognition for mobile devices,” in ICASSP. IEEE, 2019, pp. 6381–6385.

5. Q. Zhang, H. Lu, H. Sak, A. Tripathi, E. McDermott, S. Koo, and S. Kumar, “Transformer transducer: A streamable speech recognition model with transformer encoders and RNN-T loss,” arXiv preprint arXiv:2002.02562, 2020.

6. K. Cho and M. Esipova, “Can neural machine translation do simultaneous translation?” arXiv preprint arXiv:1606.02012, 2016. [Online]. Available: http://arxiv.org/abs/1606.02012

7. J. Gu, G. Neubig, K. Cho, and V. O. Li, “Learning to translate in real-time with neural machine translation,” arXiv preprint arXiv:1610.00388, 2016.

8. M. Ma, B. Zheng, K. Liu, R. Zheng, H. Liu, K. Peng, K. Church, and L. Huang, “Incremental text-to-speech synthesis with prefix-to-prefix framework,” arXiv preprint arXiv:1911.02750, 2019.

9. J. Shen, R. Pang, R. J. Weiss, M. Schuster, N. Jaitly, Z. Yang, Z. Chen, Y. Zhang, Y. Wang, R. Skerry-Ryan et al., “Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions,” in ICASSP. IEEE, 2018, pp. 4779–4783.
